Coupling Machine Learning to Fortran using the FTorch Library

2025-07-09

Precursors

Slides and Materials

The material for this workshop, including a link to the slides to follow on your own device, can be found at: github.com/Cambridge-ICCS/FTorch-workshop

Licensing

Except where otherwise noted, these presentation materials are licensed under the Creative Commons Attribution-NonCommercial 4.0 International (CC BY-NC 4.0) License.

Vectors and icons by SVG Repo under CC0(1.0) or FontAwesome under SIL OFL 1.1

Preparation

Codespaces

In this tutorial, we will be using GitHub Codespaces to run the exercises. If you are not familiar with Codespaces, please refer to the Codespaces documentation for more information.

- Navigate to the FTorch-workshop repository page and click on the green “Code” button. NOTE: Make sure you open a Codespace for FTorch-workshop, not FTorch.

- Select “Codespaces” and then “Create codespace on main”.

- Wait for the codespace to be created and opened in your browser. You will be put in a VSCode environment with a terminal at the bottom.

Note that the codespace comes with a Python virtual environment already set up and activated for you.

Motivation

Machine Learning in Science

We typically think of Deep Learning as an end-to-end process; a black box with an input and an output.

Who’s that Pokémon?

\[\begin{bmatrix}\vdots\\a_{23}\\a_{24}\\a_{25}\\a_{26}\\a_{27}\\\vdots\\\end{bmatrix}=\begin{bmatrix}\vdots\\0\\0\\1\\0\\0\\\vdots\\\end{bmatrix}\]

It’s Pikachu!

Neural Net by 3Blue1Brown under fair dealing.

Pikachu © The Pokemon Company, used under fair dealing.

Challenges

- Reproducibility

  - Ensure net functions the same in-situ

- Re-usability

  - Make ML parameterisations available to many models

  - Facilitate easy re-training/adaptation

- Language Interoperation

Language interoperation

Many large scientific models are written in Fortran (or C, or C++).

Much machine learning is conducted in Python.

Mathematical Bridge by cmglee used under CC BY-SA 3.0

PyTorch, the PyTorch logo and any related marks are trademarks of The Linux Foundation.

TensorFlow, the TensorFlow logo and any related marks are trademarks of Google Inc.

Efficiency

We consider two types of efficiency:

- Computational

- Developer

Both affect ‘time-to-science’.

- Don’t rewrite nets after you have already trained them.

- Not all scientists are computer scientists.

- Software should be simple to learn and deploy.

- May not have access to extensive software support.

- HPC environments want minimal additional dependencies.

- Needs to be as efficient as possible.

How it Works

Torch

PyTorch

- an open-source deep-learning framework

- developed by Meta AI, now part of the Linux Foundation

- written in C++ with a Python interface

- port of Torch (ATen), but also includes Caffe2 etc.

libtorch

- A (Pythonic-ish) C++ interface to the underlying code.

- Ability to save and read PyTorch models (and weights) through TorchScript

- Fortran can bind to this using the iso_c_binding module (intrinsic since Fortran 2003).

- Utilising shared memory (on CPU) reduces data transfer overheads.

Torch and PyTorch logos under Creative Commons

FTorch

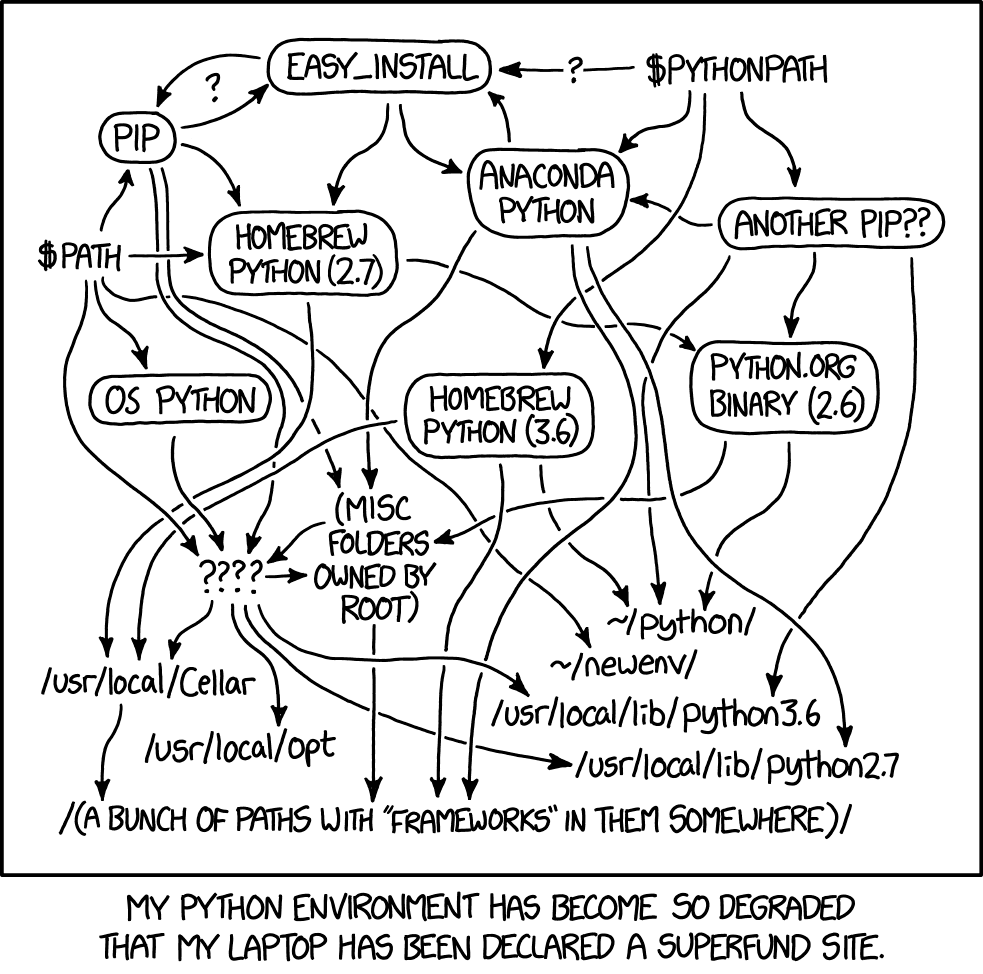

[Figure labels: Python env, Python runtime]

xkcd #1987 by Randall Munroe, used under CC BY-NC 2.5

Further Information

For more details on the development see slides from our recent talk here: jackatkinson.net/slides/Oxford-FTorch/

Or join the upcoming (18th July) seminar here: N8 Leeds Seminar

Time for the practical

Exercise 0 – Hello Fortran and PyTorch!

Question: What are people’s experience levels with and use cases of Fortran and PyTorch?

Navigate to exercises/exercise_00/ where you will see both Python and Fortran files.

Python

hello_pytorch.py defines a net SimpleNet that takes an input vector of length 5 and multiplies it by 2.

Note:

- the nn.Module class

- the forward() method
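As a rough illustration of those two ingredients, here is a minimal sketch of what such a net could look like. It is not the workshop's hello_pytorch.py: the layer name and the choice of fixing the weights to twice the identity matrix are assumptions made purely for illustration.

```python
import torch
from torch import nn


class SimpleNet(nn.Module):
    """Illustrative stand-in: doubles a length-5 input vector."""

    def __init__(self):
        super().__init__()
        # A single linear layer without bias (assumed layout).
        self.layer = nn.Linear(5, 5, bias=False)
        # Fix the weights to 2 * identity so the layer multiplies by two.
        with torch.no_grad():
            self.layer.weight.copy_(2.0 * torch.eye(5))

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # forward() defines what happens when the model is called.
        return self.layer(x)


model = SimpleNet()
model.eval()

# Inference only, so gradients are not needed.
with torch.no_grad():
    output = model(torch.arange(5, dtype=torch.float32))

print(output)
```

Subclassing nn.Module and implementing forward() is all PyTorch needs in order to call the model like a function.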

Running:

should produce the output:

Compilers and CMake

First we will check that we have Fortran, C and C++ compilers installed:

Running:

Should produce output similar to:

Fortran

The file hello_fortran.f90 contains a program to take an input array and call a subroutine to multiply it by two before printing the result.

The subroutine is contained in a separate module math_mod.f90, however.

We can compile both of these to produce .o object files and .mod module files using:

We then link these together into an executable ftn_prog using:

Installing FTorch

FTorch source code

The source code for FTorch is contained in the src/ directory. This contains:

- ctorch.cpp - Bindings to the libtorch C++ API.

- ctorch.h - C header file to bind to from Fortran.

- ftorch.F90 - the Fortran routines we will be calling.

These are compiled, linked together, and installed using CMake.

- Simplifies the build process for users.

- Accommodates different machines and setups.

- Configured in the CMakeLists.txt file.

Build FTorch

This is all handled by the build_FTorch.sh script in the Codespace.

To keep things clean we will do all of our building from a self-contained build/ directory.

We can now execute CMake to build here using the code in the above directory:

Build FTorch - details

In reality we often need to specify a number of additional options:

cmake .. -DCMAKE_BUILD_TYPE=Release \
    -DCMAKE_Fortran_COMPILER=gfortran \
    -DCMAKE_C_COMPILER=gcc \
    -DCMAKE_CXX_COMPILER=g++ \
    -DCMAKE_PREFIX_PATH=<path/to/libtorch/> \
    -DCMAKE_INSTALL_PREFIX=<path/to/install/ftorch/> \
    -DCMAKE_GPU_DEVICE=<NONE|CUDA|XPU|MPS> \
    ...

Notes:

- The Fortran compiler should match that being used to build your code.

- We need gcc >= 9.

- Set build type to Debug for debugging with -g.

- Prefix path is wherever libtorch is on your system.

- Install prefix can be set to anywhere. Defaults may require root/admin access.

Assuming everything proceeds successfully CMake will generate a Makefile for us.

We can run this locally using:

If we specified a particular location to install FTorch we can do this by running:

The above two commands can be combined into a single option using:

Installation will place files in CMAKE_INSTALL_PREFIX/:

- include/ contains header and mod files.

- lib/ contains cmake and library files.

  - This could be called lib64/ on some systems.

  - UNIX will use .so files whilst Windows has .dll files.

Exercises

Exercise 1

Now that we have FTorch installed on the system we can move to writing code that uses it, needing only to link to our installation at compile and runtime.

We will start off with a basic example showing how to couple code in Exercise 1:

- Design and train a PyTorch model.

- Save PyTorch model to TorchScript.

- Write Fortran using FTorch to call saved model.

- Compile and run code, linking to FTorch.

PyTorch

Examine exercises/exercise_01/simplenet.py.

This contains a contrived PyTorch model with a single nn.Linear layer that will multiply the input by two.

With our virtual environment active we can test this by running the code with:

Input: tensor([0., 1., 2., 3., 4.])

Output: tensor([0., 2., 4., 6., 8.])

Offline training with FTorch

Saving to TorchScript

To use the net from Fortran we need to save our PyTorch net as TorchScript in a .pt file.

FTorch comes with a handy utility, pt2ts.py, to help with this, located at FTorch/utils/pt2ts.py.

We have copied a version of this into exercise_01/, ready for you to modify and run to save our SimpleNet model to TorchScript.

Notes:

- There are handy TODO comments where you need to adapt the code.

- pt2ts.py expects an nn.Module subclass with a forward() method.

- There are two options to save:

  - tracing using torch.jit.trace(). This passes a dummy tensor through the model, recording operations. It is the simplest approach.

  - scripting using torch.jit.script(). This converts Python code directly to TorchScript. It is more complicated, but necessary for advanced features and/or control operations.

- A summary of the TorchScript model can be printed from Python.
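To make the two saving options concrete, the following is a minimal sketch using the standard torch.jit calls directly. It is not the contents of pt2ts.py: the Doubler class, the file name, and the use of a temporary directory are assumptions chosen for illustration.

```python
import os
import tempfile

import torch
from torch import nn


class Doubler(nn.Module):
    """Hypothetical toy model: multiplies its input by two."""

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return 2.0 * x


model = Doubler()
model.eval()

# Option 1: tracing. A dummy input is passed through the model and the
# operations performed on it are recorded.
dummy_input = torch.ones(5)
traced_model = torch.jit.trace(model, dummy_input)

# Option 2: scripting. The Python source of forward() is compiled directly.
scripted_model = torch.jit.script(model)

# Print a summary of the generated TorchScript.
print(scripted_model.code)

# Save to a .pt file (a temporary location here) and load it back as a check.
with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "saved_doubler.pt")
    traced_model.save(path)
    reloaded_model = torch.jit.load(path)

test_input = torch.arange(5, dtype=torch.float32)
traced_output = reloaded_model(test_input)
scripted_output = scripted_model(test_input)
print(traced_output)
```

For a model like this with no data-dependent branching, both routes should give the same result; scripting becomes relevant once forward() contains control flow that a single traced pass would not capture.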

Calling from Fortran

We are now in a state to use our saved TorchScript model from within Fortran.

exercises/exercise_01/simplenet_fortran.f90 contains skeleton code with Fortran arrays to hold the input data for the net and the results returned.

We will modify it to create the necessary data structures and load and call the net.

- Import the ftorch module.

- Create torch_tensors and a torch_model to hold the data and net.

- Map Fortran data from arrays to the torch_tensors.

- Call torch_model_forward to run the net.

- Clean up.

Notes:

- See the solution file simplenet_fortran_sol.f90 for an ideal version of the code.

- For more information on the subroutines and API see the online API documentation.

Building the code

Once we have modified the Fortran we need to compile the code and link it to FTorch.

For convenience, this can be done in the codespace from the exercise_01/ subdirectory as follows:

The path to the FTorch installation will be picked up automatically so doesn’t need to be specified.

Running the code

To run the code we can use the generated executable:

0.00000000 2.00000000 4.00000000 6.00000000 8.00000000

Exercise 2: Larger code considerations

What we have considered so far is a simple contrived example designed to teach the basics.

However, in reality the codes we will be using are more complex, and full of terrors.

- We will be calling the net repeatedly over the course of many iterations.

- Reading in the net and weights from file is expensive.

- Don’t do this at every step!

In exercise 2 we will look at an example of how to ideally structure a slightly more complex code setup. For those familiar with climate models this may be nothing new. We make use of the traditional separation into subroutines for:

- Initialisation

- Updating

- Finalisation
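The benefit of this separation can be sketched with a pure-Python analogy. This is not FTorch code: NetWrapper, its methods, and the doubling "net" are hypothetical stand-ins, with a counter playing the role of the expensive read from file.

```python
class NetWrapper:
    """Hypothetical stand-in for a module that wraps a saved net."""

    load_count = 0  # how many times the (expensive) load has happened

    def init(self):
        # Expensive step: a real code would read the net and weights from file.
        NetWrapper.load_count += 1
        self.net = lambda values: [2.0 * v for v in values]

    def update(self, values):
        # Cheap step: run inference with the already-loaded net.
        return self.net(values)

    def finalise(self):
        # Release whatever the net was holding.
        self.net = None


def run_bad(n_steps):
    """Load, call, and clean up inside the loop."""
    total = 0.0
    for step in range(n_steps):
        wrapper = NetWrapper()
        wrapper.init()
        total += sum(wrapper.update([float(step)] * 5))
        wrapper.finalise()
    return total


def run_good(n_steps):
    """Load once, call many times, clean up once."""
    wrapper = NetWrapper()
    wrapper.init()
    total = 0.0
    for step in range(n_steps):
        total += sum(wrapper.update([float(step)] * 5))
    wrapper.finalise()
    return total


NetWrapper.load_count = 0
bad_total = run_bad(100)
bad_loads = NetWrapper.load_count

NetWrapper.load_count = 0
good_total = run_good(100)
good_loads = NetWrapper.load_count

print(f"bad: {bad_loads} loads, good: {good_loads} load")
```

Both versions compute the same answer; the difference is only in how often the expensive initialisation runs.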

Exercise 2

Navigate to the exercises/exercise_02/ directory.

Here you will see two code directories, good/ and bad/.

Both perform the same operation:

- Run the SimpleNet from Exercise 1 10,000 times.

- Increment the input vector at each step.

- Accumulate the sum of the output vector.

The exercise is the same for both folders:

- Run the pre-prepared pt2ts.py script to save the net.

- Inspect the code to see how it works:

  - Both have a main program in simplenet_fortran.f90.

  - Both have FTorch code extracted to a module fortran_ml_mod.f90.

    - bad is in a single routine.

    - good is split into init, iter, and finalise.

- Modify the Makefile to link to FTorch and build the codes.

- Time the codes and observe the difference.

Exercise 2 - solution

For a complete version of the example, see the Looping example.

Exercise 3: Automatic differentiation

PyTorch has a powerful automatic differentiation engine called autograd that can be used to compute derivatives of expressions involving tensors.

We recently exposed this functionality in FTorch, allowing you to do this in Fortran, too. This is a key step facilitating online training in FTorch.

In this exercise, we walk through differentiating the mathematical expression \[Q = 3 (a^3 - b^2/3)\] using both PyTorch and FTorch.
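Differentiating by hand gives \(\partial Q/\partial a = 9a^2\) and \(\partial Q/\partial b = -2b\). The PyTorch side of this can be sketched as follows; the input values for a and b are arbitrary choices for illustration and are not necessarily those used in the exercise files.

```python
import torch

# Inputs we want derivatives with respect to (values are assumptions).
a = torch.tensor([2.0, 3.0], requires_grad=True)
b = torch.tensor([6.0, 4.0], requires_grad=True)

# The expression from the text.
Q = 3 * (a**3 - b**2 / 3)

# Q is a vector, so reduce it to a scalar before calling backward().
# Each element of Q depends only on the matching elements of a and b,
# so the resulting gradients are the element-wise derivatives.
Q.sum().backward()

# Compare autograd's result with the hand-derived expressions.
expected_dQ_da = 9 * a.detach() ** 2
expected_dQ_db = -2 * b.detach()

print("dQ/da:", a.grad, "expected:", expected_dQ_da)
print("dQ/db:", b.grad, "expected:", expected_dQ_db)
```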

Online training with FTorch

Multiple inputs and outputs

Supply multiple inputs and outputs to the net as arrays of tensors.

- For more details see the MultiIO Example.
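On the Python side, a net with several inputs and outputs might look like the sketch below. The TwoInTwoOut class and its multipliers are hypothetical and are not taken from the MultiIO example; the point is only that the example inputs are passed to torch.jit.trace as a tuple.

```python
import torch
from torch import nn


class TwoInTwoOut(nn.Module):
    """Hypothetical net with two inputs and two outputs."""

    def forward(self, x: torch.Tensor, y: torch.Tensor):
        # Scale each input independently (multipliers are arbitrary).
        return 2.0 * x, 3.0 * y


model = TwoInTwoOut()
model.eval()

# With several inputs, the example inputs for tracing are given as a tuple.
example_inputs = (torch.ones(4), torch.ones(4))
traced_model = torch.jit.trace(model, example_inputs)

# The traced model takes two tensors and returns a tuple of two tensors.
out_x, out_y = traced_model(torch.arange(4, dtype=torch.float32), torch.ones(4))
print(out_x, out_y)
```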

GPU Acceleration

- FTorch automatically has access to GPU acceleration through the PyTorch backend.

- When running pt2ts.py, save the model on GPU.

  - Guidance provided in the file.

- When creating torch_tensors set the device to torch_kCUDA instead of torch_kCPU.

- To target a specific device supply the device_index argument.

- CPU-GPU data transfer cannot be avoided; use MPI_GATHER() to reduce it.

- Use torch_tensor_to to transfer tensors between devices (and data types).

- For more details see:

  - the online documentation

  - the GPU Example
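As a rough picture of what saving the model on GPU involves on the Python side, here is a minimal sketch. It is not the code in pt2ts.py, whose own guidance should be followed; the model definition and the fallback to CPU when no CUDA device is present are assumptions made so that the snippet runs anywhere.

```python
import torch
from torch import nn

# Use a CUDA device if one is available, otherwise fall back to CPU (assumption).
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Illustrative model: a single linear layer fixed to multiply by two.
model = nn.Linear(5, 5, bias=False)
with torch.no_grad():
    model.weight.copy_(2.0 * torch.eye(5))

# Move the weights to the target device before tracing.
model = model.to(device)
model.eval()

# The dummy input must live on the same device as the model.
dummy_input = torch.ones(5, device=device)
traced_model = torch.jit.trace(model, dummy_input)

# Inputs at run time must also be on that device.
output = traced_model(torch.arange(5, dtype=torch.float32, device=device))
print(output.device, output.cpu())
```

The same principle carries over to the calling side: the model and the tensors passed to it need to be on the same device.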

Ongoing and future Work

- Online training

- UKCA (United Kingdom Chemistry and Aerosols) case study.

- Better integration into CESM and other codes

- Benchmarking against other solutions

- Benchmarking “ease of use” is hard

Thanks for Listening

For more information please speak to us afterwards, or drop us a message.

The ICCS received support from