Introduction to Neural Networks with PyTorch

NCAS Summer School 2025

ICCS/Cambridge

Rough Schedule

- 9:00-10:30 - Neural Networks and Climate Application lecture

- 11:00-12:30 - Teaching/Code-along

Material

These slides can be viewed at:

The html and source can be found on GitHub. Follow this link:

Based on the workshop developed by Jack Atkinson and Jim Denholm:

V1.0 released and JOSE paper accepted:

Learning objectives

The key learning objective from this workshop could be simply summarised as: Provide the ability to develop ML models in PyTorch.

Specifically:

- provide an understanding of the structure of a PyTorch model and ML pipeline,

- introduce the different functionalities PyTorch might provide,

- encourage good research software engineering (RSE) practice, and

- exercise careful consideration and understanding of data used for training ML models.

With regard to specific ML content, we cover:

- using ML for both classification and regression,

- artificial neural networks (ANNs) and convolutional neural networks (CNNs), and

- treatment of tabular data and image data.

Part 1: Neural-network basics – and fun applications.

Stochastic gradient descent (SGD)

- Generally speaking, most neural networks are fit/trained using SGD (or some variant of it).

- To understand how one might fit a function with SGD, let’s start with a straight line: \[y=mx+c\]

Fitting a straight line with SGD I

- Question: when we differentiate a function, what do we get?

- Consider:

\[y = mx + c\]

\[\frac{dy}{dx} = m\]

- \(m\) is certainly \(y\)’s slope, but is there a (perhaps) more fundamental way to view a derivative?

Fitting a straight line with SGD II

- Answer: a function’s derivative (its gradient, when there is more than one variable) gives a vector which points in the direction of steepest ascent.

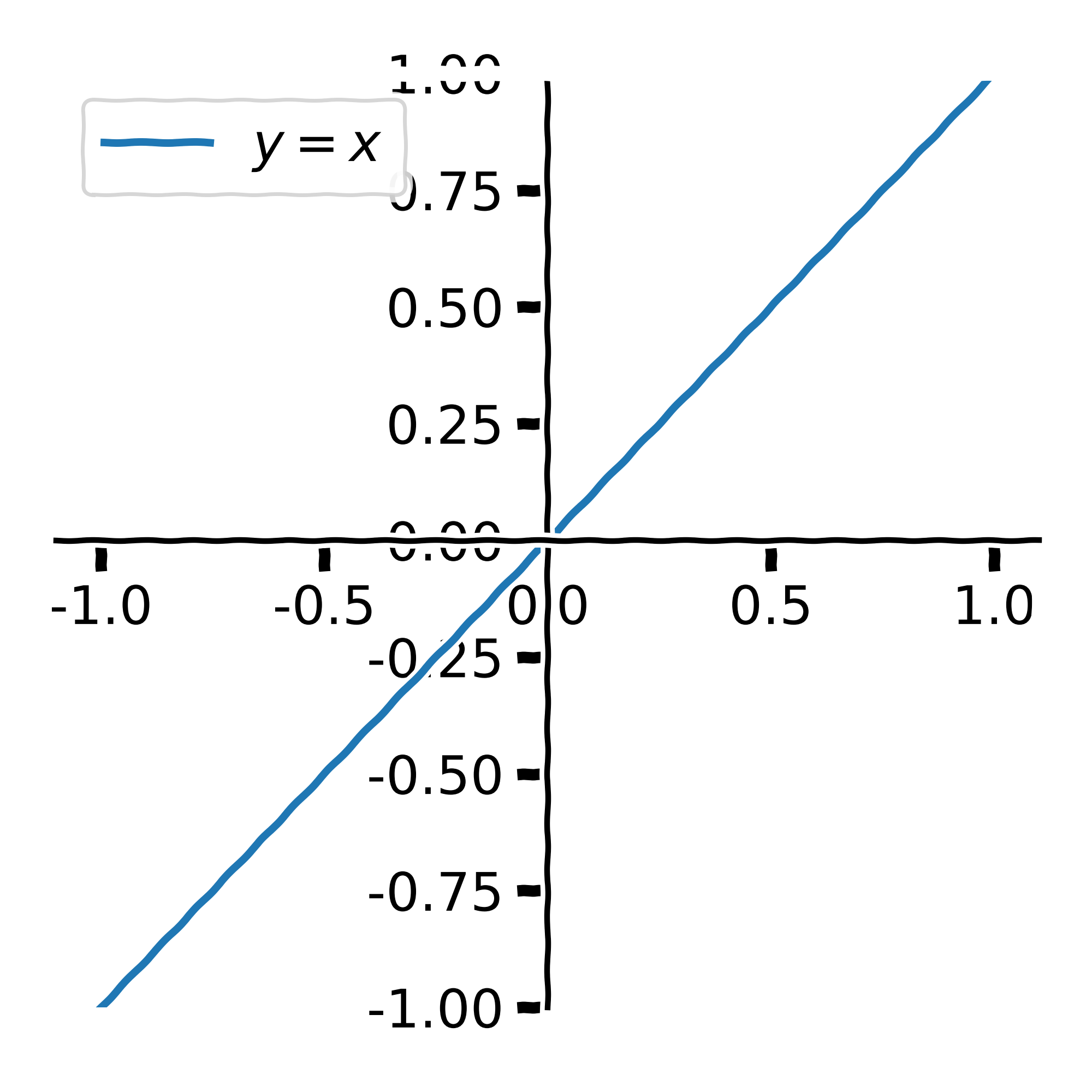

- Consider

\[y = x\]

\[\frac{dy}{dx} = 1\]

- What is the direction of steepest descent?

\[-\frac{dy}{dx}\]

Fitting a straight line with SGD III

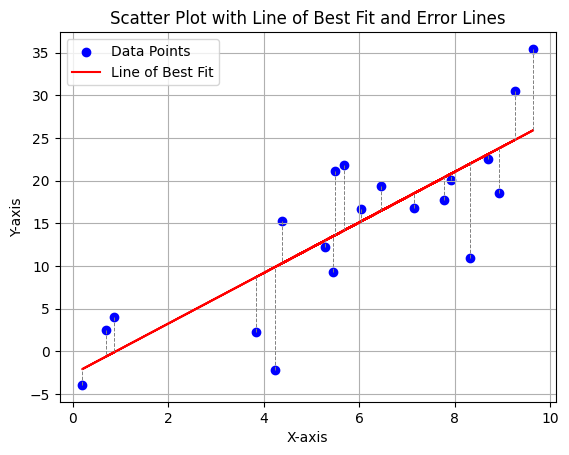

- When fitting a function, we are essentially creating a model, \(f\), which describes some data, \(y\).

- We therefore need a way of measuring how well a model’s predictions match our observations.

Fitting a straight line with SGD IV

- We can measure the distance between \(f(x_{i})\) and \(y_{i}\).

Fitting a straight line with SGD V

- Normally we might consider the mean-squared error:

\[L_{\text{MSE}} = \frac{1}{n}\sum_{i=1}^{n}\left(y_{i} - f(x_{i})\right)^{2}\]

Model: \(f(x) = mx + c\)

Data: \(\{x_{i}, y_{i}\}\)

Loss: \(\frac{1}{n}\sum_{i=1}^{n}(y_{i} - f(x_{i}))^{2}\)

- We can differentiate the loss function with respect to each parameter in the model \(f\). \[ \begin{align} L_{\text{MSE}} &= \frac{1}{n}\sum_{i=1}^{n}(y_{i} - f(x_{i}))^{2}\\ &= \frac{1}{n}\sum_{i=1}^{n}(y_{i} - mx_{i} - c)^{2} \end{align} \]

Fitting a straight line with SGD VI

- Differentiating with respect to each parameter gives:

\[ \frac{\partial L}{\partial m} \;=\; \frac{1}{n}\sum_{i=1}^{n} 2\bigl(m\,x_{i}+c-y_{i}\bigr)\,x_{i}. \]

\[ \frac{\partial L}{\partial c} \;=\; \frac{1}{n}\sum_{i=1}^{n} 2\bigl(m\,x_{i}+c-y_{i}\bigr). \]

- This gradient is used to find the parameters that minimise the loss, thereby reducing overall error.
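As a quick sanity check on the two partial derivatives above, the following minimal sketch compares them with central finite differences. The data values and the helper names (`mse`, `grad_m`, `grad_c`) are illustrative assumptions, not part of the workshop material.

```python
# Illustrative data (an assumption): points scattered around y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.1, 2.9, 5.2, 6.8]


def mse(m, c):
    """Mean-squared error of the line f(x) = m*x + c on (xs, ys)."""
    return sum((y - (m * x + c)) ** 2 for x, y in zip(xs, ys)) / len(xs)


def grad_m(m, c):
    """Analytic dL/dm = (1/n) * sum(2 * (m*x + c - y) * x)."""
    return sum(2 * (m * x + c - y) * x for x, y in zip(xs, ys)) / len(xs)


def grad_c(m, c):
    """Analytic dL/dc = (1/n) * sum(2 * (m*x + c - y))."""
    return sum(2 * (m * x + c - y) for x, y in zip(xs, ys)) / len(xs)


# Central finite differences at an arbitrary point (m, c).
m, c, eps = 0.5, -0.3, 1e-6
fd_m = (mse(m + eps, c) - mse(m - eps, c)) / (2 * eps)
fd_c = (mse(m, c + eps) - mse(m, c - eps)) / (2 * eps)

print(f"dL/dm analytic={grad_m(m, c):.6f} finite-diff={fd_m:.6f}")
print(f"dL/dc analytic={grad_c(m, c):.6f} finite-diff={fd_c:.6f}")
```

The two columns should agree to several decimal places; a sign error in the loss (for example `+ c` instead of `- c`) would show up here as a mismatch.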

Update Rule

- We can iteratively minimise the loss by stepping the model’s parameters in the direction of steepest descent:

\[m_{k + 1} = m_{k} - \frac{\partial L}{\partial m} \cdot l_{r}\]

\[c_{k + 1} = c_{k} - \frac{\partial L}{\partial c} \cdot l_{r}\]

- where \(l_{\text{r}}\) is a small constant known as the learning rate.
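The update rule can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions: the data are noise-free samples of the line \(y = 2x + 1\), and the learning rate, batch size and step count are arbitrary choices. Each step estimates the gradient from a random mini-batch, which is what makes the procedure stochastic.

```python
import random

# Noise-free illustrative data (an assumption) from the line y = 2x + 1.
xs = [i / 10 for i in range(20)]
ys = [2.0 * x + 1.0 for x in xs]

rng = random.Random(0)   # fixed seed so the run is repeatable
m, c = 0.0, 0.0          # initial guess for the parameters
lr = 0.1                 # learning rate l_r
batch_size = 5

for step in range(2000):
    # "Stochastic": estimate the gradient from a random mini-batch.
    batch = rng.sample(range(len(xs)), batch_size)
    dL_dm = sum(2 * (m * xs[i] + c - ys[i]) * xs[i] for i in batch) / batch_size
    dL_dc = sum(2 * (m * xs[i] + c - ys[i]) for i in batch) / batch_size
    # Step both parameters in the direction of steepest descent.
    m -= lr * dL_dm
    c -= lr * dL_dc

print(f"m = {m:.4f}, c = {c:.4f}")
```

With these settings the estimates should end up close to the true slope and intercept; a learning rate that is too large makes the iteration diverge instead.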

Quick recap

To fit a model we need:

- Some data.

- A model.

- A loss function.

- An optimisation procedure (often SGD and other flavours of SGD).
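The four ingredients map directly onto a PyTorch training loop. The following is a minimal sketch rather than the workshop's own code: the data, learning rate and step count are assumptions, and for brevity every step uses the full dataset (so it is plain gradient descent driven by `torch.optim.SGD`, not mini-batch SGD).

```python
import torch

torch.manual_seed(0)

# 1. Some data: noise-free samples of y = 2x + 1 (illustrative assumption).
x = torch.linspace(0.0, 1.9, 20).unsqueeze(1)   # shape (20, 1)
y = 2.0 * x + 1.0

# 2. A model: a single linear layer, i.e. f(x) = m*x + c.
model = torch.nn.Linear(in_features=1, out_features=1)

# 3. A loss function.
loss_fn = torch.nn.MSELoss()

# 4. An optimisation procedure.
optimiser = torch.optim.SGD(model.parameters(), lr=0.1)

for step in range(2000):
    optimiser.zero_grad()          # clear gradients from the previous step
    loss = loss_fn(model(x), y)    # forward pass and loss
    loss.backward()                # autograd computes dL/dm and dL/dc
    optimiser.step()               # parameter update

m, c = model.weight.item(), model.bias.item()
print(f"m = {m:.3f}, c = {c:.3f}, final loss = {loss.item():.2e}")
```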

What about neural networks?

- Neural networks are just functions.

- We can “train”, or “fit”, them as we would any other function:

- by iteratively nudging parameters to minimise a loss.

- With neural networks, differentiating the loss function is a bit more complicated

- but ultimately it’s just the chain rule.

- We won’t go through any more maths on the matter; learning resources on the topic are in no short supply.
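In practice the chain rule is applied for us by PyTorch's automatic differentiation. As a small illustration (with made-up data and parameter values), the sketch below lets `loss.backward()` compute the gradients of the mean-squared error for a straight line and compares them with the hand-derived expressions.

```python
import torch

# Illustrative data and parameter values (assumptions).
x = torch.tensor([0.0, 1.0, 2.0, 3.0])
y = torch.tensor([1.1, 2.9, 5.2, 6.8])
m = torch.tensor(0.5, requires_grad=True)
c = torch.tensor(-0.3, requires_grad=True)

# Forward pass: PyTorch records the operations applied to m and c.
loss = torch.mean((y - (m * x + c)) ** 2)

# Backward pass: the chain rule is applied through the recorded graph.
loss.backward()

# Hand-derived gradients of the mean-squared error, for comparison.
with torch.no_grad():
    manual_dm = torch.mean(2 * (m * x + c - y) * x)
    manual_dc = torch.mean(2 * (m * x + c - y))

print(m.grad.item(), manual_dm.item())
print(c.grad.item(), manual_dc.item())
```

The same mechanism scales to networks with millions of parameters, which is why only the forward computation ever needs to be written by hand.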

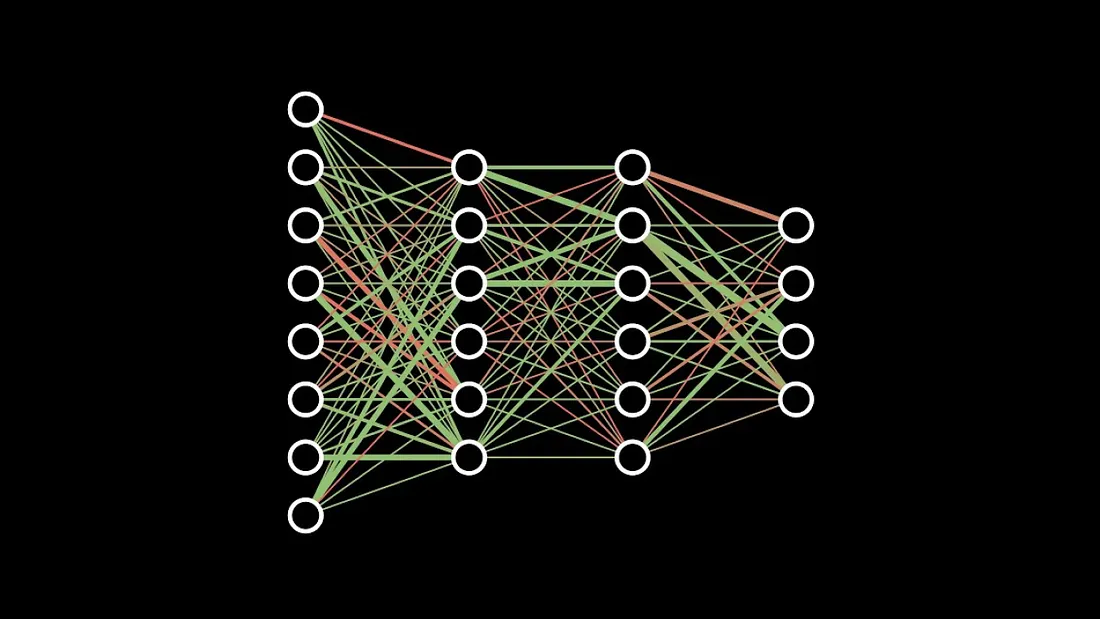

Fully-connected neural networks

- The simplest neural networks commonly used are generally called fully-connected neural nets, dense networks, multi-layer perceptrons, or artificial neural networks (ANNs).

- We map between the features at consecutive layers through matrix multiplication and the application of some non-linear activation function.

\[a_{l+1} = \sigma \left( W_{l}a_{l} + b_{l} \right)\]

- For common choices of activation function, see the PyTorch docs.
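To make the layer equation concrete, here is a minimal sketch that evaluates \(\sigma(W_{l}a_{l} + b_{l})\) by hand and compares it with `torch.nn.Linear`. The layer sizes and the choice of ReLU for \(\sigma\) are assumptions for illustration.

```python
import torch

torch.manual_seed(0)

layer = torch.nn.Linear(4, 3)   # W has shape (3, 4), b has shape (3,)
a_l = torch.rand(4)             # features at layer l

# Explicit matrix-vector product, bias, then the activation function.
manual = torch.relu(layer.weight @ a_l + layer.bias)

# The same computation using the layer object directly.
builtin = torch.relu(layer(a_l))

print(manual)
print(builtin)
```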

Image source: 3Blue1Brown

Uses: Classification and Regression

- Fully-connected neural networks are often applied to tabular data.

- i.e. where it makes sense to express the data in a table-like object (such as a pandas data frame).

- The input features and targets are represented as vectors.

- Neural networks are normally used for one of two things:

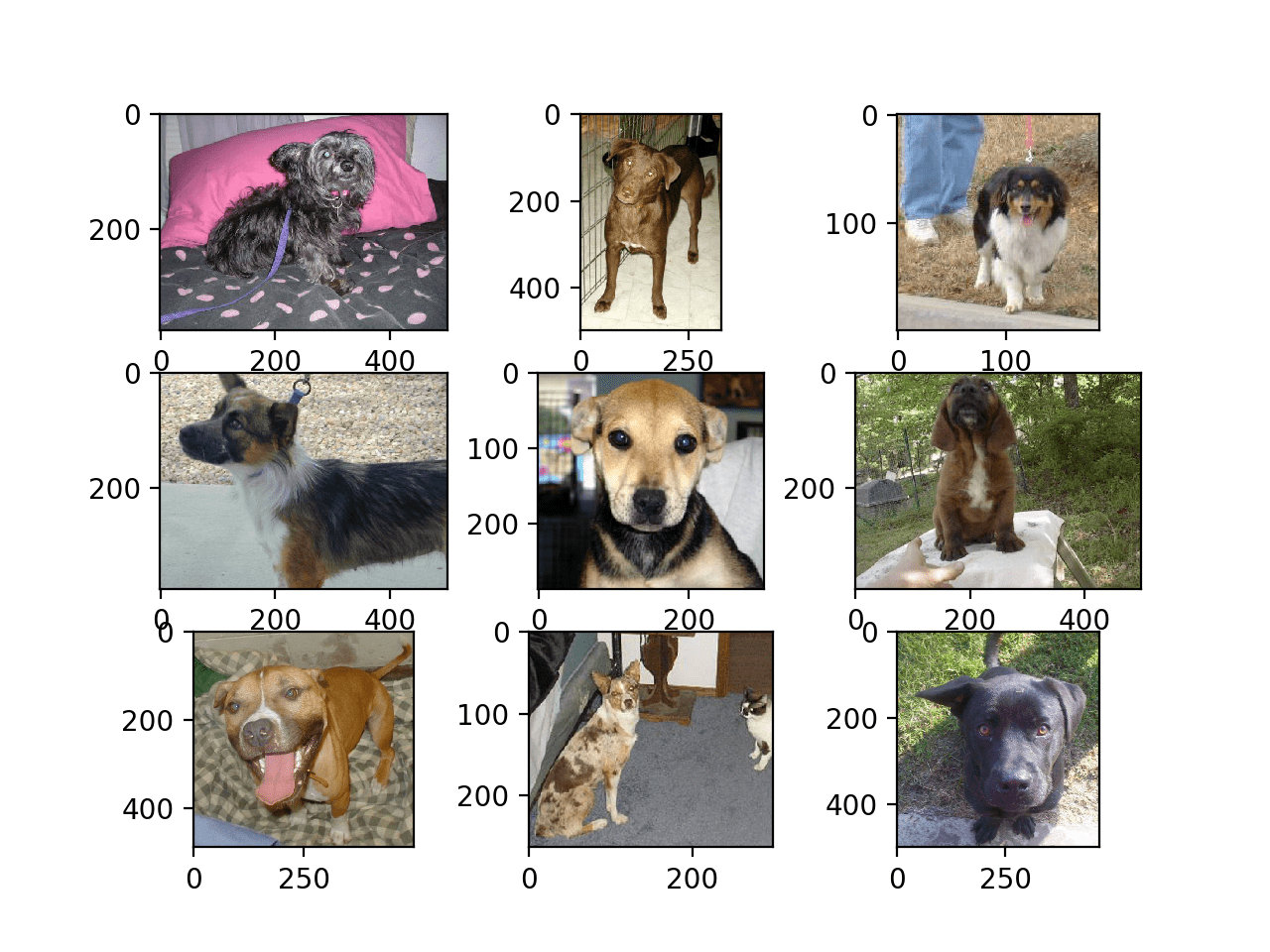

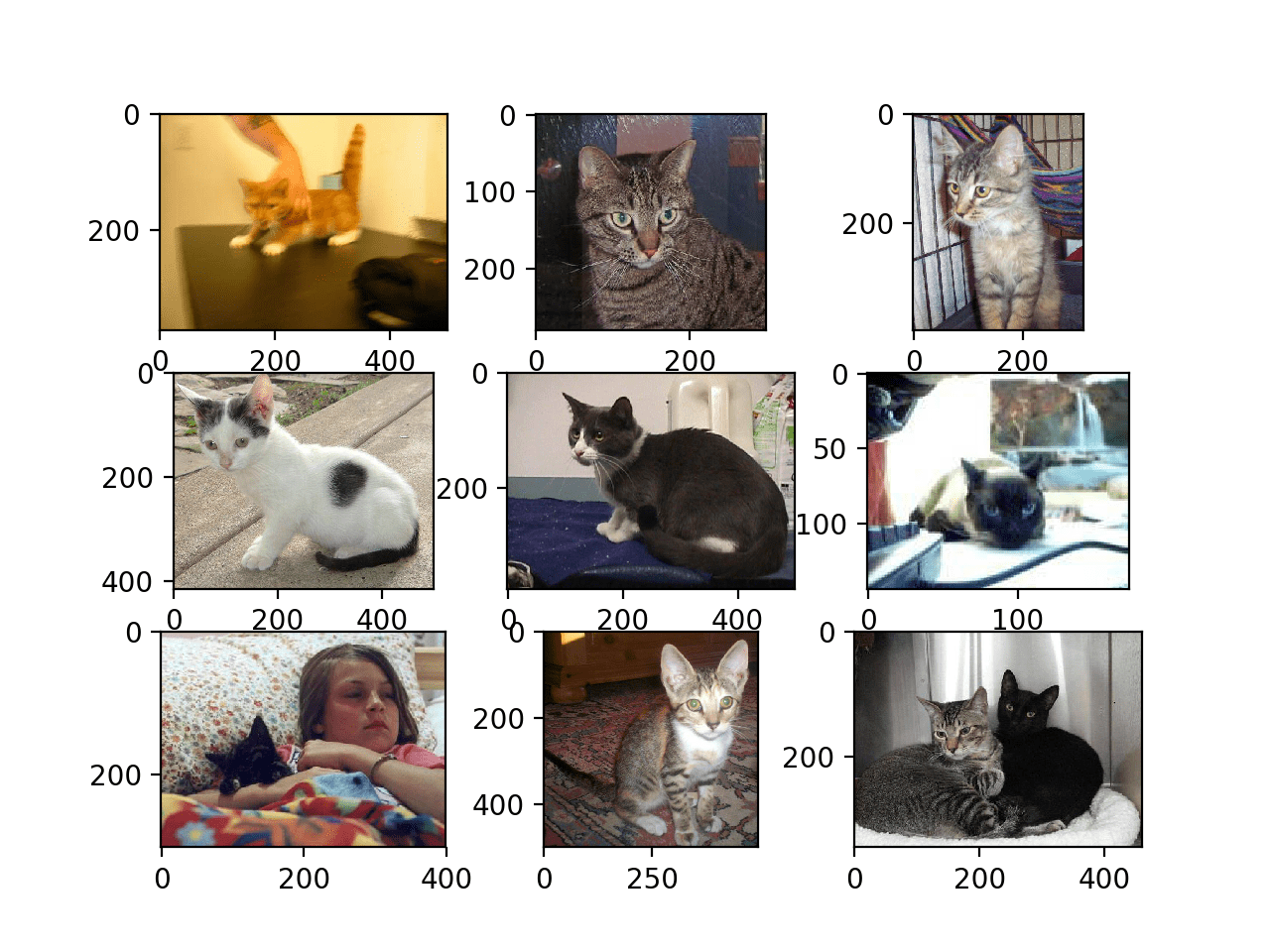

- Classification: assigning a semantic label to something – e.g. is this a dog or a cat?

- Regression: Estimating a continuous quantity – e.g. mass or volume – based on other information.
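In code, the two tasks differ mainly in the shape of the output and the loss function. The sketch below is illustrative only: the feature and class counts are made up, and pairing `CrossEntropyLoss` with classification and `MSELoss` with regression is a common choice rather than the only one.

```python
import torch

torch.manual_seed(0)
features = torch.rand(8, 4)             # batch of 8 samples, 4 features each

# Classification: one output (logit) per class, integer class labels as targets.
classifier = torch.nn.Linear(4, 3)      # 3 hypothetical classes
class_targets = torch.randint(0, 3, (8,))
class_loss = torch.nn.CrossEntropyLoss()(classifier(features), class_targets)

# Regression: one continuous output per sample, float targets.
regressor = torch.nn.Linear(4, 1)
value_targets = torch.rand(8, 1)
reg_loss = torch.nn.MSELoss()(regressor(features), value_targets)

print(class_loss.item(), reg_loss.item())
```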

Python and PyTorch

- In this workshop, we will implement some straightforward neural networks in PyTorch, and use them for different classification and regression problems.

- PyTorch is a deep learning framework that can be used in both Python and C++.

- There are other frameworks, such as JAX, TensorFlow and PyTorch Lightning.

- See the PyTorch website: https://pytorch.org/

Datasets, DataLoaders & nn.Module

What a Dataset class does

- Provides a uniform API to your data

- Handles

- Loading raw files (images, CSVs, audio …)

- Train / validation / test split logic

- Transforms / augmentation per item

- Item retrieval so the rest of PyTorch can stay agnostic

Anatomy of a custom Dataset

    import PIL.Image  # used later, in __getitem__
    import torch

    class MyDataset(torch.utils.data.Dataset):
        def __init__(self, root_dir, split="train", transform=None):
            # 1. load or download files / labels
            # (load_index_file stands in for your own index-reading helper)
            self.paths, self.labels = load_index_file(root_dir, split)
            self.transform = transform  # 2. save transforms

The constructor is where you gather file paths, download archives, read CSVs, etc.

__len__ & __getitem__

        # Methods of MyDataset (continued)
        def __len__(self):
            return len(self.paths)  # total number of samples

        def __getitem__(self, idx):
            # needs `import PIL.Image` at the top of the file
            img = PIL.Image.open(self.paths[idx]).convert("RGB")
            if self.transform:  # 3. apply transforms
                img = self.transform(img)
            label = self.labels[idx]
            return img, label  # 4. single example

With these two methods PyTorch knows how big the dataset is and how to fetch one record.

Using the custom dataset
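A minimal sketch of how such a dataset might be used. Because `MyDataset` depends on image files and on the `load_index_file` helper, the example substitutes a hypothetical in-memory `ToyDataset` with the same interface; its name, sizes and transform are assumptions for illustration.

```python
import torch


class ToyDataset(torch.utils.data.Dataset):
    """Hypothetical in-memory stand-in for MyDataset (random images)."""

    def __init__(self, num_samples=10, transform=None):
        self.images = torch.rand(num_samples, 3, 8, 8)
        self.labels = torch.arange(num_samples) % 2
        self.transform = transform

    def __len__(self):
        return len(self.images)

    def __getitem__(self, idx):
        img = self.images[idx]
        if self.transform:
            img = self.transform(img)
        return img, self.labels[idx]


# A simple callable transform: rescale pixel values from [0, 1] to [-1, 1].
dataset = ToyDataset(num_samples=10, transform=lambda img: 2.0 * img - 1.0)

print(len(dataset))          # uses __len__
img, label = dataset[0]      # uses __getitem__
print(img.shape, label)
```

Indexing and `len()` are all the rest of PyTorch needs, so a real image dataset can be swapped in without touching the training code.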

The DataLoader at a glance

- Wraps any Dataset in an iterable

- Batches samples together

- Shuffles if asked

- Uses multiprocessing (num_workers) to pre‑fetch data in parallel

- Returns (batch, labels) tuples ready for the GPU

Typical DataLoader code
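A minimal sketch of typical usage, assuming a small in-memory `TensorDataset` as a stand-in for real data; the batch size and other settings are illustrative choices.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical stand-in data: 100 samples with 4 features and a binary label.
dataset = TensorDataset(torch.rand(100, 4), torch.randint(0, 2, (100,)))

loader = DataLoader(
    dataset,
    batch_size=32,    # samples per batch
    shuffle=True,     # reshuffle at the start of every epoch
    num_workers=0,    # 0 = load in the main process; raise to pre-fetch in parallel
)

for batch, labels in loader:
    print(batch.shape, labels.shape)
```

The last batch is smaller than the others because 100 is not a multiple of 32; passing `drop_last=True` would discard it instead.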

Quick networks with nn.Sequential

    import torch

    mlp = torch.nn.Sequential(
        torch.nn.Linear(784, 256), torch.nn.ReLU(),
        torch.nn.Linear(256, 64), torch.nn.ReLU(),
        torch.nn.Linear(64, 10)
    )
    out = mlp(torch.rand(32, 784))  # 32‑sample batch; out has shape (32, 10)

Great for simple feed‑forward stacks when no branching logic is needed.

nn.Module overview

- The base class for all neural‑network parts in PyTorch

- You sub‑class, then implement

- __init__(self): declare layers

- forward(self, x): define the forward pass

Declaring layers in __init__

    class MyCNN(torch.nn.Module):
        def __init__(self, num_classes=2):
            super().__init__()
            self.features = torch.nn.Sequential(
                torch.nn.Conv2d(3, 32, 3, padding=1), torch.nn.ReLU(),
                torch.nn.MaxPool2d(2),
                torch.nn.Conv2d(32, 64, 3, padding=1), torch.nn.ReLU(),
                torch.nn.MaxPool2d(2)
            )
            # 64 channels x 56 x 56 pixels, e.g. for 224 x 224 inputs
            # (each MaxPool2d(2) halves the height and width)
            self.classifier = torch.nn.Linear(64*56*56, num_classes)

The forward pass

        def forward(self, x):
            x = self.features(x)    # conv stack
            x = x.flatten(1)        # flatten to (N, …)
            x = self.classifier(x)  # logits
            return x

Only forward is needed – back‑prop is handled automatically.
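The classifier's input size of `64*56*56` only matches the flattened feature maps for particular image sizes. The following sketch traces the shapes through the same convolutional stack, assuming a single 224 × 224 RGB input (the input size is an assumption; the slides do not state it).

```python
import torch

# The same convolutional stack as self.features in MyCNN.
features = torch.nn.Sequential(
    torch.nn.Conv2d(3, 32, 3, padding=1), torch.nn.ReLU(),
    torch.nn.MaxPool2d(2),
    torch.nn.Conv2d(32, 64, 3, padding=1), torch.nn.ReLU(),
    torch.nn.MaxPool2d(2),
)

x = torch.rand(1, 3, 224, 224)     # assumed input: one 224 x 224 RGB image
feature_maps = features(x)
flat = feature_maps.flatten(1)     # keep the batch dimension, merge the rest

print(feature_maps.shape)
print(flat.shape)
```

Feeding images of a different size would change the flattened length and make the `Linear` layer raise a shape error, which is one motivation for adaptive pooling.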

Calling the model ≈ calling forward

    model = MyCNN()
    logits1 = model(images)          # preferred ✔
    logits2 = model.forward(images)  # works, but avoid

model(input) internally routes to model.forward(input) via __call__.

Key Take‑Aways

- Dataset = organized access to individual samples

- DataLoader = batching, shuffling, parallel I/O

- nn.Module = reusable building block; override __init__ & forward

- model(x) is the idiomatic way to run a forward pass

- Use nn.Sequential for quick layer chains

Exercises

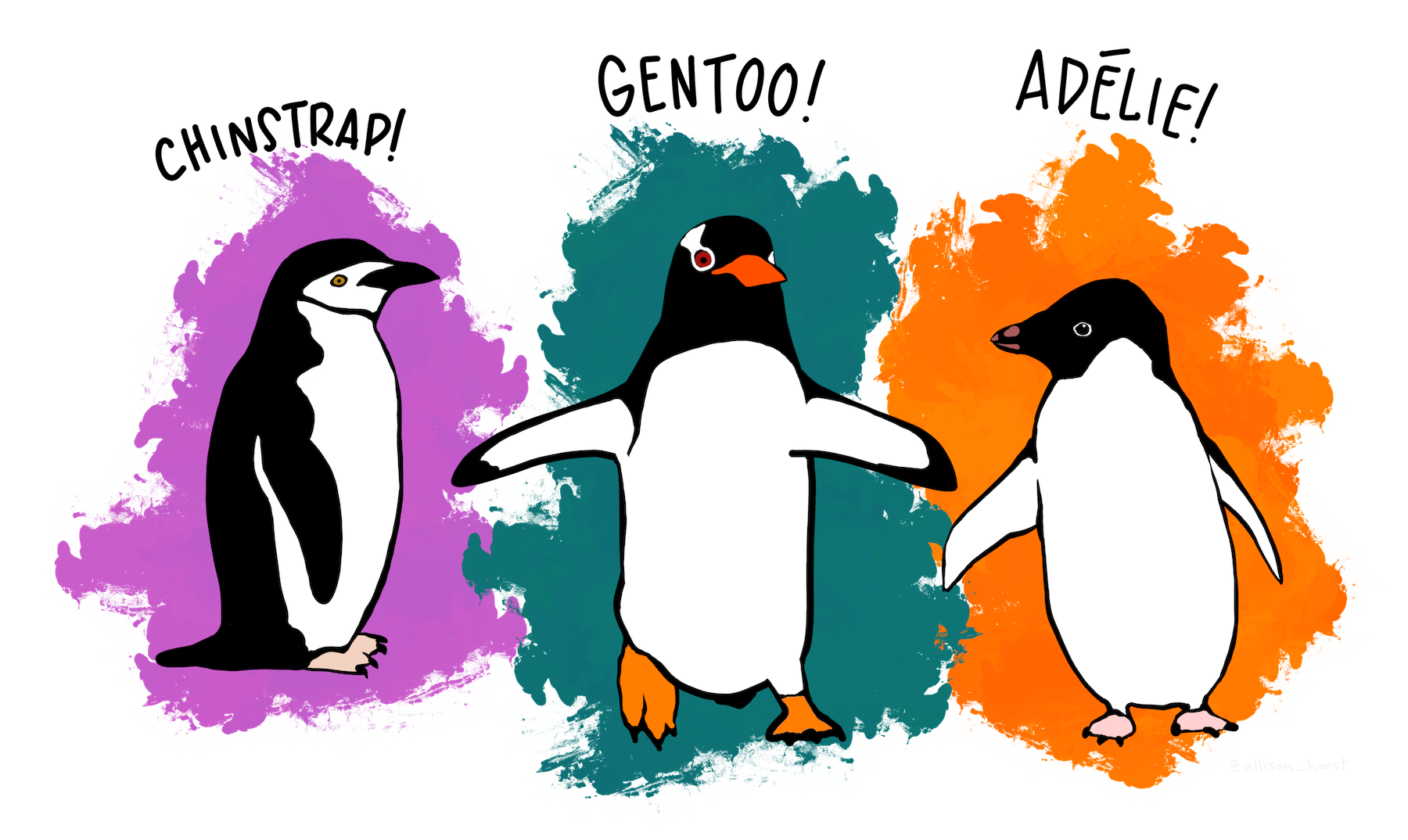

Penguins!

Image source: Palmer Penguins by Alison Horst

Exercise 1 – classification

- In this exercise, you will train a fully-connected neural network to classify the species of penguins based on certain physical features.

- https://github.com/allisonhorst/palmerpenguins

Exercise 2 – regression

- In this exercise, you will train a fully-connected neural network to predict the mass of penguins based on other physical features.

- https://github.com/allisonhorst/palmerpenguins

Part 2: Fun with CNNs

Convolutional neural networks (CNNs): why?

Advantages over simple ANNs:

- They require far fewer parameters per layer.

- The forward pass of a conv layer involves running a filter of fixed size over the inputs.

- The number of parameters per layer does not depend on the input size.

- They are a much more natural choice of function for image-like data:

Image source: Machine Learning Mastery
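The parameter-count argument can be checked directly. In this minimal sketch the layer sizes are arbitrary assumptions and `count_parameters` is a small helper written for the example.

```python
import torch


def count_parameters(module):
    """Total number of learnable parameters in a module."""
    return sum(p.numel() for p in module.parameters())


# A 3x3 conv layer mapping 3 input channels to 32 output channels.
conv = torch.nn.Conv2d(3, 32, kernel_size=3, padding=1)

# A dense layer mapping a flattened 3 x 32 x 32 image to just 32 features.
dense = torch.nn.Linear(3 * 32 * 32, 32)

print(count_parameters(conv))    # 3*32*3*3 weights + 32 biases
print(count_parameters(dense))   # 3072*32 weights + 32 biases

# The same conv layer accepts inputs of different spatial sizes unchanged.
for size in (32, 64):
    print(conv(torch.rand(1, 3, size, size)).shape)
```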

Convolutional neural networks (CNNs): why?

Some other points:

- Convolutional layers are translationally equivariant (and, combined with pooling, approximately translationally invariant):

- i.e. they don’t care where the “dog” is in the image.

- Convolutional layers are not rotationally invariant.

- e.g. a model trained to detect correctly-oriented human faces will likely fail on upside-down images

- We can address this with data augmentation (explored in exercises).
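The different behaviour under shifts and rotations can be checked numerically. In the sketch below (an illustration with a randomly initialised layer and a random image), shifting the input simply shifts the output of a conv layer, whereas rotating the input does not simply rotate the output. Circular padding is an assumption made so that the shift comparison is exact at the image borders.

```python
import torch

torch.manual_seed(0)

# A single conv layer; circular padding makes the shift check exact at the borders.
conv = torch.nn.Conv2d(1, 1, kernel_size=3, padding=1,
                       padding_mode="circular", bias=False)
image = torch.rand(1, 1, 8, 8)

with torch.no_grad():
    # Shift the input 2 pixels to the right, then convolve ...
    conv_of_shifted = conv(torch.roll(image, shifts=2, dims=-1))
    # ... versus convolve first, then shift the output.
    shifted_conv = torch.roll(conv(image), shifts=2, dims=-1)

    # The same comparison for a 90-degree rotation.
    conv_of_rotated = conv(torch.rot90(image, k=1, dims=(-2, -1)))
    rotated_conv = torch.rot90(conv(image), k=1, dims=(-2, -1))

shift_commutes = torch.allclose(conv_of_shifted, shifted_conv, atol=1e-6)
rotation_commutes = torch.allclose(conv_of_rotated, rotated_conv, atol=1e-6)
print(shift_commutes, rotation_commutes)
```

Because the learned filters have no built-in rotational symmetry, showing the network rotated copies of the training images is a practical way to make it robust to orientation.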

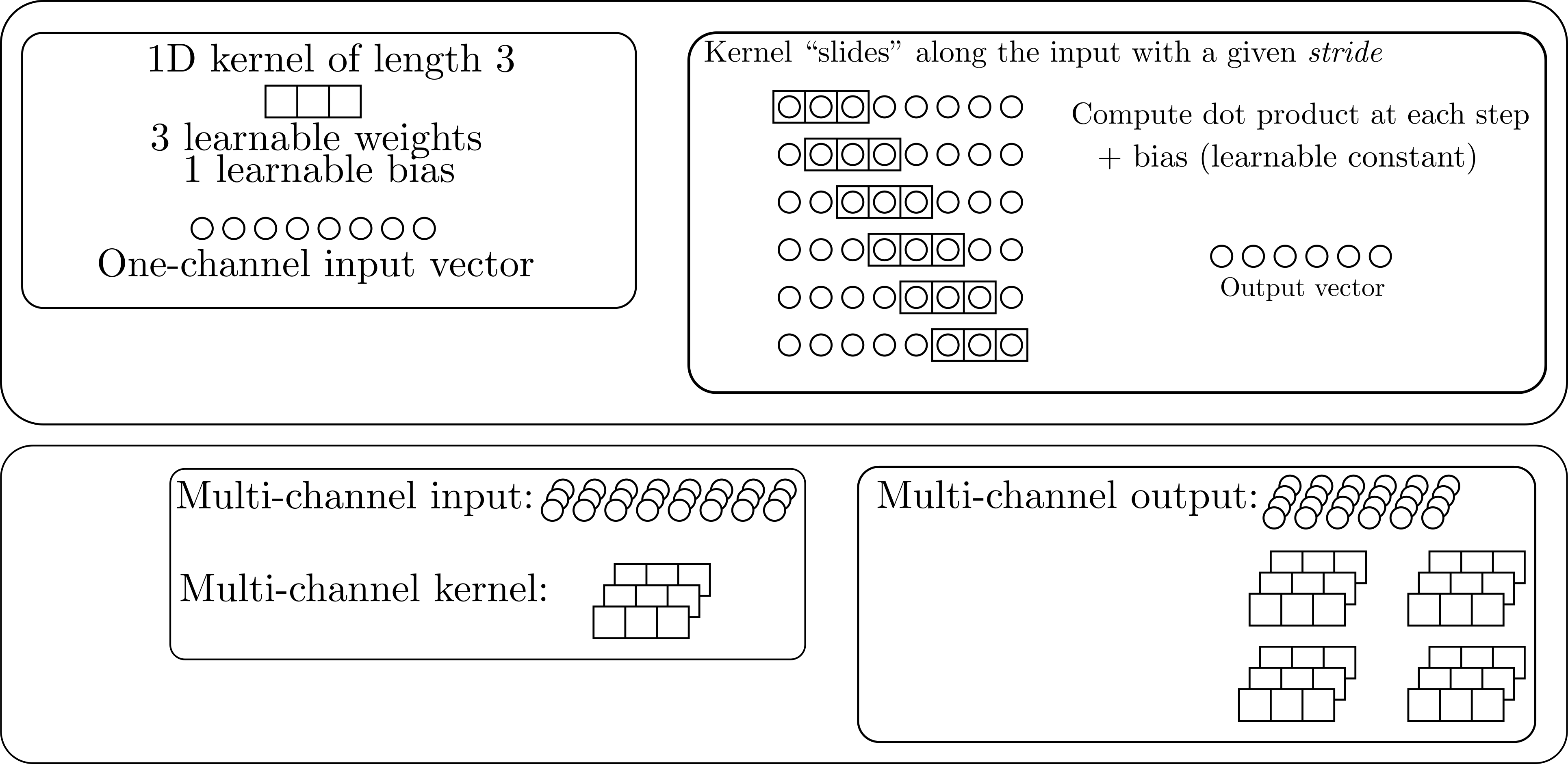

What is a (1D) convolutional layer?

See the torch.nn.Conv1d docs

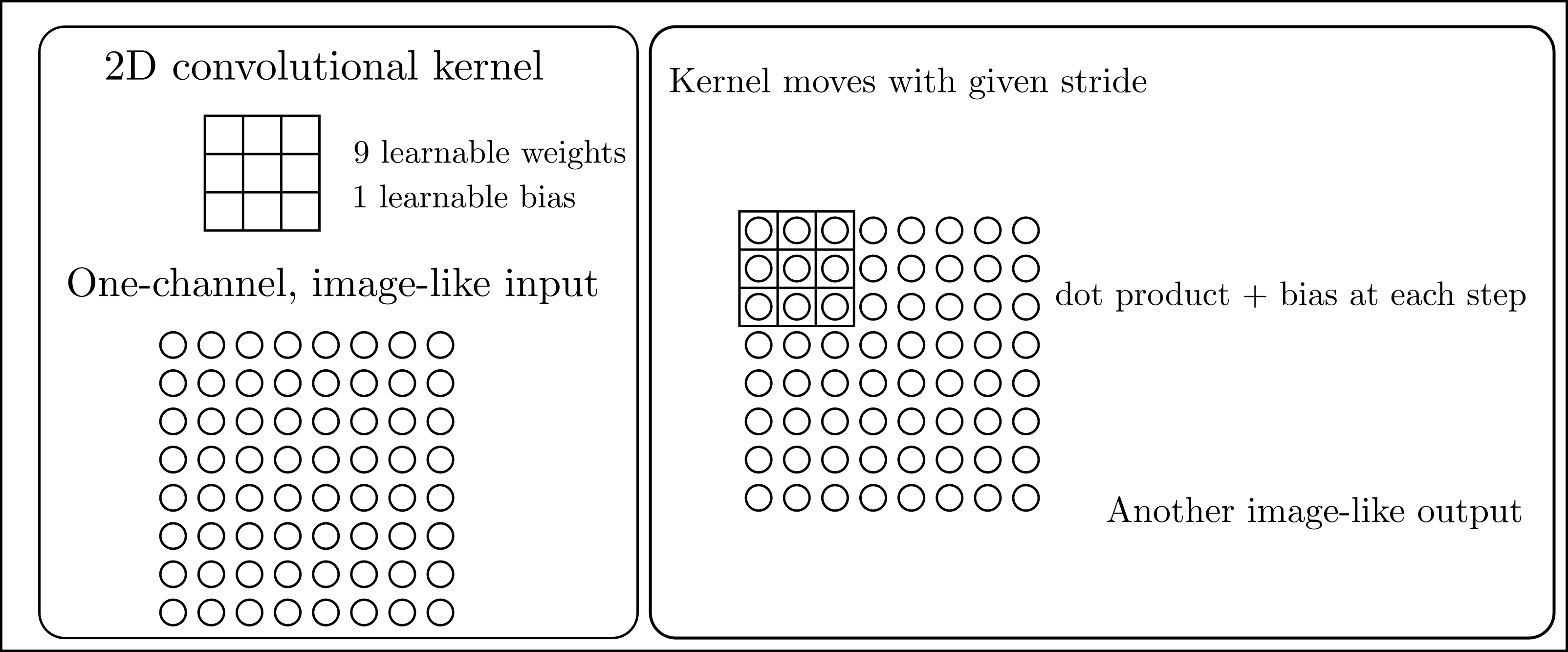
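As a small worked example, the sketch below slides a length-3 kernel along a length-5 signal by hand and compares the result with `torch.nn.functional.conv1d`. The signal and kernel values are arbitrary illustrative choices.

```python
import torch
import torch.nn.functional as F

signal = torch.tensor([1.0, 2.0, 3.0, 4.0, 5.0])
kernel = torch.tensor([1.0, 0.0, -1.0])

# Slide the kernel along the signal and take a dot product at each position.
manual = torch.stack([
    (signal[i:i + len(kernel)] * kernel).sum()
    for i in range(len(signal) - len(kernel) + 1)
])

# conv1d expects (batch, channels, length) inputs and
# (out_channels, in_channels, kernel_size) weights.
builtin = F.conv1d(signal.view(1, 1, -1), kernel.view(1, 1, -1)).flatten()

print(manual)
print(builtin)
```

Without padding, the output is shorter than the input by `kernel_size - 1`; in a `torch.nn.Conv1d` layer the kernel values are learnable parameters rather than fixed numbers.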

2D convolutional layer

- Same idea as in one dimension, but in two (funnily enough).

- Everything else proceeds in the same way as with the 1D case.

- See the torch.nn.Conv2d docs.

- As with Linear layers, Conv2d layers also have non-linear activations applied to them.

Typical CNN overview

- Series of conv layers extract features from the inputs.

- Often called an encoder.

- Adaptive pooling layer:

- Image-like objects \(\to\) vectors.

- Standardises size.

- torch.nn.AdaptiveAvgPool2d

- torch.nn.AdaptiveMaxPool2d

- Classification (or regression) head.

- For common CNN architectures see the torchvision.models docs.
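The encoder, adaptive pooling and head layout can be sketched as a small module. `TinyCNN`, its channel counts and its default output size are hypothetical choices for illustration, not an architecture taken from the workshop.

```python
import torch


class TinyCNN(torch.nn.Module):
    """Hypothetical minimal CNN: encoder -> adaptive pooling -> head."""

    def __init__(self, in_channels=1, num_outputs=10):
        super().__init__()
        self.encoder = torch.nn.Sequential(
            torch.nn.Conv2d(in_channels, 16, 3, padding=1), torch.nn.ReLU(),
            torch.nn.MaxPool2d(2),
            torch.nn.Conv2d(16, 32, 3, padding=1), torch.nn.ReLU(),
        )
        self.pool = torch.nn.AdaptiveAvgPool2d(1)   # any H x W -> 1 x 1
        self.head = torch.nn.Linear(32, num_outputs)

    def forward(self, x):
        x = self.encoder(x)          # (N, 32, H/2, W/2)
        x = self.pool(x).flatten(1)  # (N, 32)
        return self.head(x)          # (N, num_outputs)


model = TinyCNN()
for size in (28, 64):
    out = model(torch.rand(4, 1, size, size))
    print(size, out.shape)
```

Because the adaptive pooling layer always produces a fixed-size vector, the same model accepts both image sizes without any change to the head.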

Exercises

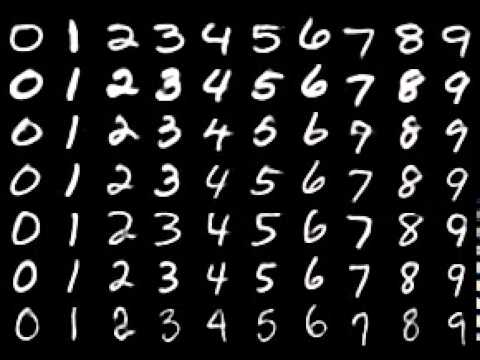

Exercise 1 – classification

MNIST hand-written digits.

- In this exercise we’ll train a CNN to classify hand-written digits in the MNIST dataset.

- See the MNIST database wiki for more details.

Image source: npmjs.com

Exercise 2 – regression

Random ellipse problem

In this exercise, we’ll train a CNN to estimate the centre \((x_{\text{c}}, y_{\text{c}})\) and the \(x\) and \(y\) radii of an ellipse defined by \[ \frac{(x - x_{\text{c}})^{2}}{r_{x}^{2}} + \frac{(y - y_{\text{c}})^{2}}{r_{y}^{2}} = 1 \]

The ellipse, and its background, will have random colours chosen uniformly on \(\left[0,\ 255\right]^{3}\).

In short, the model must learn to estimate \(x_{\text{c}}\), \(y_{\text{c}}\), \(r_{x}\) and \(r_{y}\).
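To give a feel for the data, here is one way such an image and its regression target might be generated. This is a hypothetical sketch: the function name `make_ellipse_image`, the image size and the ranges for the centre and radii are all assumptions, and the exercise's own data generator may differ.

```python
import torch


def make_ellipse_image(size=64, generator=None):
    """Hypothetical generator: returns (image, target) for one random ellipse."""
    g = generator
    # Random centre and radii in pixels (ranges are illustrative assumptions).
    xc, yc = (torch.rand(2, generator=g) * size).tolist()
    rx, ry = (4.0 + torch.rand(2, generator=g) * size / 4).tolist()

    # Pixel coordinate grids: ys varies down the rows, xs across the columns.
    ys, xs = torch.meshgrid(torch.arange(size), torch.arange(size), indexing="ij")
    inside = ((xs - xc) ** 2 / rx ** 2 + (ys - yc) ** 2 / ry ** 2) <= 1.0

    # Uniform random RGB colours in [0, 255] for background and ellipse.
    background, foreground = torch.randint(0, 256, (2, 3), generator=g)
    image = torch.where(inside.unsqueeze(0), foreground.view(3, 1, 1),
                        background.view(3, 1, 1))
    target = torch.tensor([xc, yc, rx, ry])
    return image, target


image, target = make_ellipse_image(size=64, generator=torch.Generator().manual_seed(0))
print(image.shape, target)
```

The image is the model input and the four-element `target` holds \(x_{\text{c}}\), \(y_{\text{c}}\), \(r_{x}\) and \(r_{y}\), so the regression head needs four outputs.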

Contact

For more information we can be reached at:

Jack Atkinson